This is a summary of almost everything you need to take care of when migrating from a monolith to microservices. Each step discussed here could be a separate blog post in itself, so my intention is not to go deep into each aspect of the migration. Instead, I want to give you a list of all the important things to take care of, along with the best approach for each of them.

Breaking the Monolith

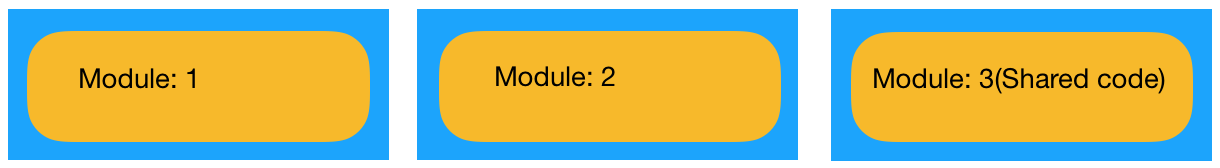

To start with, let us assume that our monolith consists of multiple modules which are deployed as separate .war files on an application server. In addition, it contains some modules which are used only as common code by other modules, for example an authorization checker, a property reader, etc.

In the diagram above, Module-1 and Module-2 are deployed as separate WARs. These two modules may still contain some duplicate code, but usually such common code lives in our shared Module-3.

Initial Steps: Identification

As an initial step, scan through the application to understand how things are deployed and which code is duplicated. This is purely an identification step: it gives us an overview of the monolith without taking anything apart.

Standalone Applications: We can assume that each module will eventually become a separate application with its own code repository. For example, our sample multi-module application has a module for user management and a module for creating tickets. These can be separated into two applications, each consisting of a single module in its own repository. Each newly created application can later be broken down further into smaller microservices, but for now we are only moving the modules into separate repositories.

Shared Code: In our multi-module application, some modules are shared by all the other modules, such as user authorization utilities, database repositories, etc. Identify these shared libraries or modules, since they can grow into separate services in a later migration phase.
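Part of the identification step can be automated. Below is a minimal Python sketch, assuming each module is a top-level directory in one checkout and that we only want exact duplicates (ignoring whitespace). Copied code is rarely byte-identical, so a real effort usually needs a proper clone-detection tool. The `*.java` pattern and the function name are illustrative.

```python
import hashlib
from collections import defaultdict
from pathlib import Path


def find_duplicate_files(modules_root: str, pattern: str = "*.java") -> dict:
    """Group source files with identical content (after whitespace normalization).

    Returns {content hash: [relative paths]}, keeping only hashes whose files
    appear in more than one module directory.
    """
    root = Path(modules_root)
    by_hash = defaultdict(list)
    for path in root.rglob(pattern):
        # Collapse all whitespace so formatting differences do not hide duplicates.
        normalized = " ".join(path.read_text(encoding="utf-8").split())
        digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        by_hash[digest].append(path.relative_to(root))

    duplicates = {}
    for digest, paths in by_hash.items():
        # Assumption: the first path component is the module directory.
        modules = {p.parts[0] for p in paths}
        if len(modules) > 1:
            duplicates[digest] = sorted(str(p) for p in paths)
    return duplicates
```

Each entry in the result is a candidate for the shared library now and, possibly, a shared service later.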

Breaking into separate applications

Once we have a plan for what should be taken apart, we can start the next phase. In this step, we create a separate Git repository for each standalone application identified in the initial phase. A multi-module monolith generally has a POM hierarchy (parent and aggregator POMs). We have to remove this hierarchy and create a standalone POM for each application, adding the dependencies directly to the new application.

For the shared code we identified, we can create a separate repository whose output is, at first, distributed as a JAR to the other applications. Add these shared JARs as dependencies in the newly created applications' POM files. In a later phase, we can put network interfaces in front of the business logic in these JARs to create multiple domain-specific services.

Releasing newly created individual applications

To validate that all your new code repositories have been separated correctly, release all your application WARs from the new standalone repositories. This step is an important checkpoint in the whole journey. By the end of it, we can be sure that every module of the multi-module monolith is now a separate application. We can also be sure that the shared libraries have their own code base and are still distributed as JARs.

NOTE: GOING FORWARD, WE WILL NOT REFER TO THEM AS MODULES. EACH MODULE IS NOW A SEPARATE APPLICATION AND WILL BE REFERRED TO AS AN APPLICATION.

Checkpoint: 1

At this point, we have created many smaller applications out of one big multi-module monolith and have achieved some separation of concerns. We no longer have a multi-module application: each application now consists of a single module and is stored in its own repository. Now we can drill down into each application to identify services and start creating them.

Identify Services (Both in applications and in shared code)

Up to this point, there was a one-to-one relationship between each original module and its new application. In this step, pick any two applications and check whether they share some common code. Look for things like business rules, database updates, validators, etc. Keep adding more applications to your analysis and document the shared services you identify. Each shared service should ideally be aligned to a domain. For example, if you see duplicate code for user updates in multiple applications, it can eventually go into a user service. Similarly, duplicate code for ticket creation will go into a ticket service.

Developing Services

Once we have identified the candidates for services, we can develop each piece of functionality as a separate service that communicates with other systems over a protocol like HTTP or AMQP. We can't just deploy microservices the way we deployed our monolith. We need a lot more tooling and supporting systems to make up the full microservices ecosystem. I cover infrastructure and security after this section, so for a practical migration effort you should read the complete document before touching your code.
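To make "a separate service over HTTP" concrete, here is a minimal sketch that uses only Python's standard library. It assumes a hypothetical user service that exposes a formerly duplicated email-validation rule at `GET /users/validate?email=...`. The endpoint, the rule, and the JSON shape are all illustrative. A real service would also need the surrounding tooling discussed in the following sections.

```python
import json
import re
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from urllib.parse import parse_qs, urlparse

# Hypothetical business rule that used to be copied into several applications.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


class UserServiceHandler(BaseHTTPRequestHandler):
    """Exposes the shared rule over HTTP at GET /users/validate?email=..."""

    def do_GET(self):
        url = urlparse(self.path)
        if url.path != "/users/validate":
            self._send_json(404, {"error": "not found"})
            return
        email = parse_qs(url.query).get("email", [""])[0]
        self._send_json(200, {"email": email, "valid": bool(EMAIL_RE.match(email))})

    def _send_json(self, status, body):
        payload = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def log_message(self, format, *args):
        pass  # keep the example quiet; a real service would log each request


def make_server(port=0):
    """Create (but do not start) the service; port 0 lets the OS pick a free port."""
    return ThreadingHTTPServer(("127.0.0.1", port), UserServiceHandler)
```

Calling `make_server(8080).serve_forever()` would run it on port 8080.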

Replacing common code with services in each application

Once a service is ready for a piece of common functionality, we can remove the common code from each application and call the newly created service for that functionality instead.
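One way to make this switch safely is to hide the functionality behind an interface. That way, the application code does not change when the implementation moves from local code to a remote call. Below is a minimal Python sketch. It assumes a hypothetical `GET /users/validate` endpoint on a hypothetical `user-service` host. The HTTP helper is injectable so the client can be tested without a network.

```python
import json
from typing import Callable, Protocol
from urllib.parse import quote
from urllib.request import urlopen


class EmailValidator(Protocol):
    def is_valid(self, email: str) -> bool: ...


class LocalEmailValidator:
    """The old in-process copy of the rule, kept only during the switch-over."""

    def is_valid(self, email: str) -> bool:
        return "@" in email


def http_get_json(url: str) -> dict:
    with urlopen(url, timeout=2) as resp:
        return json.load(resp)


class RemoteEmailValidator:
    """Calls the new service instead of running copied code locally."""

    def __init__(self, base_url: str, get_json: Callable[[str], dict] = http_get_json):
        self.base_url = base_url.rstrip("/")
        self.get_json = get_json  # injectable, so tests need no network

    def is_valid(self, email: str) -> bool:
        body = self.get_json(f"{self.base_url}/users/validate?email={quote(email)}")
        return bool(body["valid"])


def register_user(email: str, validator: EmailValidator) -> str:
    # Application code depends only on the interface, so swapping the
    # implementation does not touch this function.
    if not validator.is_valid(email):
        raise ValueError(f"invalid email: {email}")
    return f"registered {email}"
```

Keeping `LocalEmailValidator` for a short while also gives a cheap fallback while the service is rolled out.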

Repeat until no more common code is duplicated

This process cannot be done in a single iteration. In each iteration, we replace some of the common code with the service we have developed for it. It is very important to restrict ourselves to small, iterative changes so that we do not disrupt the whole ecosystem. With small, iterative changes, we can keep improving the system and make it more adaptable to the changes in the next iteration.

Converting a server-based app into an FE + BFF app

We can split our server-based application (JSP + Servlet) into two components. The first is a front-end part with session management. The second is a backend for frontend (BFF) that exposes all the APIs our front-end application needs. A BFF is a special case of an API gateway: the client calls the gateway's API rather than calling the microservices directly. A BFF serves a single client and therefore has a very limited scope. It still calls other microservices and is deployed just like any other service. This lets us build a complete application purely from services that call further services downstream. The BFF is the place to check user privileges and other client-specific business rules. A practical example is an Angular front end calling a REST API.
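Below is a minimal sketch of a BFF endpoint in Python. It assumes two hypothetical downstream calls (`get_user`, `list_tickets`) and a hypothetical `ticket:read` role. It shows the two BFF responsibilities described above: a client-specific privilege check, and the aggregation of several downstream responses into one payload shaped for a single UI.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Downstream:
    """Hypothetical clients for two downstream services, injected as plain callables."""
    get_user: Callable[[str], dict]
    list_tickets: Callable[[str], list]


def dashboard_endpoint(user_id: str, roles: set, services: Downstream) -> dict:
    """One call from the UI becomes several calls downstream."""
    # Client-specific privilege check happens before any downstream call.
    if "ticket:read" not in roles:
        return {"status": 403, "body": {"error": "forbidden"}}
    user = services.get_user(user_id)
    tickets = services.list_tickets(user_id)
    # Return only the fields this particular UI needs.
    return {
        "status": 200,
        "body": {
            "displayName": user["name"],
            "openTickets": [t["id"] for t in tickets if t["state"] == "open"],
        },
    }
```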

Checkpoint: 2

After many iterations of service development and replacement, we have our microservices set up. However, all the steps mentioned in the rest of this section should be considered before you even start splitting your monolith. We can't have microservices if we do not already have the right tooling to support the new ecosystem.

Security

With this new architecture, our security needs have changed and become more complex, since all the components are now distributed. Below is a summary of how we solved this using OpenID Connect (OIDC) and JSON Web Tokens (JWT).

User Authentication

A user authenticates with a username and password, and the Identity and Access Management (IAM) service issues a JWT to be used for downstream authentication. This JWT is a short-lived token that expires after some time, so our application must be able to obtain a new token to keep the user interaction going.
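To illustrate what "short-lived" means in practice, here is a minimal Python sketch that issues and verifies an HS256 JWT with an `exp` claim, using only the standard library. It is a simplification. It uses a shared HMAC secret, whereas OIDC providers typically sign with an asymmetric key (for example RS256) that services verify against published public keys. Production code should use a vetted JWT library rather than hand-rolled parsing.

```python
import base64
import hashlib
import hmac
import json
import time


def _b64url(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def issue_token(subject: str, secret: bytes, ttl_seconds: int = 300, now=None) -> str:
    """Issue a short-lived HS256 JWT (the role the IAM service plays)."""
    now = int(time.time() if now is None else now)
    header = {"alg": "HS256", "typ": "JWT"}
    claims = {"sub": subject, "iat": now, "exp": now + ttl_seconds}
    signing_input = f"{_b64url(json.dumps(header).encode())}.{_b64url(json.dumps(claims).encode())}"
    sig = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()
    return f"{signing_input}.{_b64url(sig)}"


def verify_token(token: str, secret: bytes, now=None) -> dict:
    """Return the claims if the signature is valid and the token has not expired."""
    header_b64, claims_b64, sig_b64 = token.split(".")
    expected = hmac.new(secret, f"{header_b64}.{claims_b64}".encode("ascii"), hashlib.sha256).digest()
    if not hmac.compare_digest(expected, _b64url_decode(sig_b64)):
        raise ValueError("bad signature")
    if json.loads(_b64url_decode(header_b64)).get("alg") != "HS256":
        raise ValueError("unexpected algorithm")
    claims = json.loads(_b64url_decode(claims_b64))
    now = time.time() if now is None else now
    if now >= claims["exp"]:
        raise ValueError("token expired")  # the client must obtain a fresh token
    return claims
```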

Service Accounts

We can create service accounts for services that act without a user, such as batch jobs and housekeeping services. These service accounts can use credentials such as certificates to obtain their tokens.
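One way to implement certificate-based service accounts is to combine the OAuth 2.0 client credentials grant (RFC 6749, section 4.4) with mutual-TLS client authentication (RFC 8705). With this setup, the certificate proves the service's identity instead of a password. The minimal Python sketch below only builds the request. The token URL, client ID, scope, and certificate paths are hypothetical. Your IAM product's documentation decides which client authentication methods it actually accepts.

```python
import ssl
from urllib.parse import urlencode
from urllib.request import Request


def build_token_request(token_url: str, client_id: str, scope: str) -> Request:
    """Build an OAuth 2.0 client_credentials token request.

    With mutual TLS, the client identifies itself with client_id in the body
    and proves possession of its certificate in the TLS handshake, so no
    secret is sent in the request.
    """
    body = urlencode({"grant_type": "client_credentials", "client_id": client_id, "scope": scope})
    return Request(
        token_url,
        data=body.encode("ascii"),
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


def mtls_context(cert_file: str, key_file: str) -> ssl.SSLContext:
    """TLS context that presents the service account's certificate to the IAM server."""
    ctx = ssl.create_default_context()
    ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return ctx
```

Sending it would look like `urllib.request.urlopen(request, context=mtls_context("svc.crt", "svc.key"))`. The `access_token` from the JSON response is then used the same way as a user's JWT.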

UI to BFF Authentication

The front end passes the JWT to the BFF, which validates it and checks whether the user is allowed to perform the operation in question. If the user is allowed, the BFF passes the same JWT to all downstream services. Each of those services uses it for its own authentication and authorization checks.
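Below is a minimal Python sketch of the BFF step. It assumes the token's signature and expiry have already been verified and decoded into `claims`. It also assumes a hypothetical `roles` claim; IAM products name this claim differently. The function rejects the call early, and otherwise returns headers that forward the unchanged bearer token downstream.

```python
def authorize_and_forward(headers: dict, claims: dict, required_role: str) -> dict:
    """BFF-side privilege check; `claims` are assumed to be already verified.

    Returns the headers for downstream calls: the very same bearer token is
    forwarded so each service can run its own checks.
    """
    scheme, _, token = headers.get("Authorization", "").partition(" ")
    if scheme.lower() != "bearer" or not token:
        raise PermissionError("missing bearer token")
    # "roles" is an assumed claim name.
    if required_role not in claims.get("roles", []):
        raise PermissionError(f"user lacks role {required_role!r}")
    return {"Authorization": f"Bearer {token}"}
```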

Service to Service Authentication

As discussed earlier, we pass the same JWT to each microservice, which authenticates every interaction and thus secures all communication between our services.

Scalability: Service Replication

One of the major benefits of a microservices architecture is that, in theory, we can scale almost without limit, because each service can be scaled independently.

Cloud-Based Systems

We can deploy one service per VM and increase the number of instances of that service to absorb increased load. We can also use containers, with one service per container, and increase the number of containers as the load grows. In practice, we should use a container orchestration tool like Kubernetes, which can increase the replica count of each service to support increased load. Ideally, each component should be deployed individually as a separate process.
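To make "increase the replica count" concrete: the Kubernetes Horizontal Pod Autoscaler documents a ratio rule, desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). It skips scaling when the ratio is within a tolerance of 1.0 (0.1 by default). The Python sketch below models only that rule plus min/max bounds. The real controller also averages metrics across pods, handles missing metrics, and applies stabilization windows.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified model of the HPA ratio rule, clamped to [min_replicas, max_replicas]."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to the target: avoid flapping
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For example, 4 replicas averaging 90% CPU against a 50% target give ceil(4 * 1.8) = 8 replicas.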

Non-Cloud-Based System

In a non-cloud-based system, it is generally not possible to keep adding machines so that each service gets its own machine. In this case, we can increase machine capacity and deploy multiple instances of the same service on a single machine. Still, it makes more sense to use some form of virtualization to get the maximum benefit out of a microservices architecture. The full benefits are hard to achieve on bare-metal infrastructure.

Load Balancing

In both of the above cases, we need efficient load balancing to distribute the load across all available instances of the same service. Service discovery with Eureka, combined with a client-side load balancer such as Spring Cloud LoadBalancer, provides this feature and by default uses round robin to distribute the load across the instances of a service. Beyond simple load balancing, we can also balance load based on the requester's geolocation, routing each call to the nearest server with services like Akamai.
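Round robin itself is simple. Below is a minimal, thread-safe Python sketch. The instance addresses are hypothetical. In a real setup, the list would come from the service registry and be refreshed through `update`.

```python
import itertools
import threading


class RoundRobinBalancer:
    """Hands out the instances of one service in turn."""

    def __init__(self, instances):
        self.update(instances)

    def update(self, instances) -> None:
        """Replace the instance list, e.g. after a fresh lookup in the registry."""
        if not instances:
            raise ValueError("no instances registered")
        self._lock = getattr(self, "_lock", None) or threading.Lock()
        with self._lock:  # request threads may be picking concurrently
            self._cycle = itertools.cycle(list(instances))

    def next_instance(self) -> str:
        with self._lock:
            return next(self._cycle)
```

With instances `a`, `b`, `c`, five calls return `a, b, c, a, b`.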

Web Servers for Static code

All static content should be deployed on standalone NGINX servers. As discussed earlier, we should try to keep separate codebases for the front end and the backend so that we can scale the two components individually. Separate codebases also let front-end and backend developers work independently. Angular and React are some of the technologies that help here, calling our backend services over HTTP. If our pages grow big, we can even divide a page into smaller fragments, each in its own application, with different teams owning different fragments.

Configuration Management

We can use tools like Spring Cloud Config Server to maintain configuration for services. It is a standalone service that holds data such as database connection settings, business configuration data, etc.
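Under the hood, a config client fetches its settings at startup. Below is a minimal Python sketch. It assumes the JSON shape that Spring Cloud Config Server returns from `/{application}/{profile}`: a `propertySources` list ordered from highest to lowest priority. A Spring Boot application would normally let the Spring Cloud Config client do this. The code only illustrates the precedence rule.

```python
import json
from urllib.request import urlopen


def flatten_config(response: dict) -> dict:
    """Collapse a config-server environment response into one dict.

    propertySources are assumed to be ordered highest priority first, so a key
    is taken from a later source only if no earlier source defined it.
    """
    merged = {}
    for source in response.get("propertySources", []):
        for key, value in source.get("source", {}).items():
            merged.setdefault(key, value)
    return merged


def fetch_config(base_url: str, application: str, profile: str) -> dict:
    """GET {base_url}/{application}/{profile} and flatten the result."""
    with urlopen(f"{base_url.rstrip('/')}/{application}/{profile}", timeout=5) as resp:
        return flatten_config(json.load(resp))
```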

If we need configuration for looking up other services, we can use a service discovery tool like Eureka to maintain a dynamic list of available services along with their details.
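Registries like Eureka keep that list dynamic with leases. Instances register, send periodic heartbeats, and are evicted when the heartbeats stop. Eureka's defaults are a 30-second renewal interval and a 90-second lease expiration. The following is a hypothetical, in-memory Python model of that idea, not Eureka's API. The injectable clock exists only to make expiry testable.

```python
import time


class ServiceRegistry:
    """In-memory registry with heartbeat leases (hypothetical, Eureka-like model)."""

    def __init__(self, lease_seconds: float = 90.0, clock=time.monotonic):
        self.lease_seconds = lease_seconds
        self.clock = clock
        self._leases = {}  # (service, address) -> time of last heartbeat

    def register(self, service: str, address: str) -> None:
        self._leases[(service, address)] = self.clock()

    heartbeat = register  # renewing a lease just refreshes its timestamp

    def deregister(self, service: str, address: str) -> None:
        self._leases.pop((service, address), None)

    def instances(self, service: str) -> list:
        """Live instances of `service`; expired leases are evicted on lookup."""
        now = self.clock()
        expired = [k for k, seen in self._leases.items() if now - seen > self.lease_seconds]
        for key in expired:
            del self._leases[key]
        return sorted(addr for (svc, addr) in self._leases if svc == service)
```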

Error Handling

We can create a global exception handler per microservice, like Spring's @ControllerAdvice, to handle all exceptions within that microservice. We can also create an error logger service that post-processes errors and logs them for further analysis. All microservices can then call this error service whenever they hit a business or technical exception.
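Below is a minimal Python sketch of the same idea. It follows the spirit of `@ControllerAdvice` rather than porting it. A decorator wraps each endpoint and maps business and technical exceptions to responses. It reports each exception through an injected `report` callable, which stands in for the error logger service. `BusinessError` and the 422/500 status choices are assumptions.

```python
import functools
import traceback
from typing import Callable


class BusinessError(Exception):
    """Expected, domain-level failure (e.g. a business rule was violated)."""


def global_error_handler(report: Callable[[dict], None]):
    """Wrap every endpoint of one service; `report` stands in for the error service."""
    def decorator(endpoint):
        @functools.wraps(endpoint)
        def wrapper(*args, **kwargs):
            try:
                return {"status": 200, "body": endpoint(*args, **kwargs)}
            except BusinessError as exc:
                report({"type": "business", "endpoint": endpoint.__name__, "message": str(exc)})
                return {"status": 422, "body": {"error": str(exc)}}
            except Exception as exc:
                report({"type": "technical", "endpoint": endpoint.__name__,
                        "message": str(exc), "trace": traceback.format_exc()})
                # Do not leak internal details to the caller.
                return {"status": 500, "body": {"error": "internal error"}}
        return wrapper
    return decorator
```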

Events for Transaction

It is tricky to achieve global transactions in a microservices architecture. If we want two operations in two different services to be part of one transaction, we can use events. For example, suppose we have two create operations in two different services that need to form a single transaction. We create the entity in a pending state using the first service and then fire an event for the second create. The second service processes the event and sends a success or failure event back to the first service. Based on that response, the first service either confirms the pending entity or deletes it (rollback). We can use AMQP or Kafka for these event-driven architectures.
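This approach is commonly known as the saga pattern. Below is a minimal in-memory Python sketch. A deque stands in for the AMQP or Kafka broker, and `AccountService` and `TicketService` are hypothetical. The first service creates its record as `PENDING`. The second service reports success or failure. The first service then confirms or deletes (compensates). Real brokers usually deliver at least once, so production handlers should also be idempotent.

```python
from collections import deque


class EventBus:
    """In-memory stand-in for an AMQP exchange or Kafka topic."""

    def __init__(self):
        self.queue = deque()
        self.handlers = {}

    def subscribe(self, event_type, handler):
        self.handlers.setdefault(event_type, []).append(handler)

    def publish(self, event_type, payload):
        self.queue.append((event_type, payload))

    def drain(self):
        # Deliver queued events one at a time, as a consumer loop would.
        while self.queue:
            event_type, payload = self.queue.popleft()
            for handler in self.handlers.get(event_type, []):
                handler(payload)


class AccountService:
    """First service: creates its record as PENDING, then waits for the outcome."""

    def __init__(self, bus):
        self.bus = bus
        self.accounts = {}
        bus.subscribe("ticket.created", self.on_ticket_created)
        bus.subscribe("ticket.failed", self.on_ticket_failed)

    def create(self, account_id, ticket_title):
        self.accounts[account_id] = "PENDING"
        self.bus.publish("ticket.requested", {"account_id": account_id, "title": ticket_title})

    def on_ticket_created(self, event):
        self.accounts[event["account_id"]] = "CONFIRMED"

    def on_ticket_failed(self, event):
        # Compensating action: undo the local create (the "rollback").
        self.accounts.pop(event["account_id"], None)


class TicketService:
    """Second service: does its own local create and reports the result as an event."""

    def __init__(self, bus):
        self.bus = bus
        self.tickets = {}
        bus.subscribe("ticket.requested", self.on_ticket_requested)

    def on_ticket_requested(self, event):
        if not event["title"]:  # hypothetical validation failure
            self.bus.publish("ticket.failed", {"account_id": event["account_id"]})
            return
        self.tickets[event["account_id"]] = event["title"]
        self.bus.publish("ticket.created", {"account_id": event["account_id"]})
```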

Tracking ID for logs

In a microservices architecture, we sometimes have 20-30 service calls for one simple page load or user data update. This makes it very difficult to follow a customer interaction through the service logs. To overcome this problem, we can use tracking IDs that let us follow the customer journey even when it spans multiple services and multiple cloud providers.

To do this, we generate a GUID at the start of the customer interaction. The GUID is passed to the BFF, which passes it on to every service it calls downstream. This lets us track the user request from FE -> BFF -> Services. In addition, because we may be using virtualization or containerization, we need log forwarders and aggregators like Splunk or ELK. These let us query the logs of all the systems and containers in our architecture.
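Below is a minimal Python sketch of the propagation step, assuming a hypothetical `X-Tracking-ID` header name. Each service reuses the incoming ID, or creates a GUID if it is the first hop. It stores the ID in a `contextvars.ContextVar` and stamps it onto every log record through a logging filter. It then returns the header to attach to downstream calls. A log aggregator can then search for one ID across all services.

```python
import contextvars
import logging
import uuid

# Tracking ID of the request currently being handled.
tracking_id = contextvars.ContextVar("tracking_id", default="-")
HEADER = "X-Tracking-ID"  # assumed header name; use the same one in every service


class TrackingIdFilter(logging.Filter):
    """Stamps every log record with the current tracking ID."""

    def filter(self, record):
        record.tracking_id = tracking_id.get()
        return True


def handle_incoming(headers: dict) -> dict:
    """Reuse the caller's ID (or start a new one); return headers for downstream calls."""
    tid = headers.get(HEADER) or str(uuid.uuid4())
    tracking_id.set(tid)
    return {HEADER: tid}


logger = logging.getLogger("ticket-service")  # hypothetical service name
handler = logging.StreamHandler()
handler.addFilter(TrackingIdFilter())
handler.setFormatter(logging.Formatter("%(tracking_id)s %(name)s %(message)s"))
logger.addHandler(handler)
logger.setLevel(logging.INFO)
```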

Performance

A few things we can consider here:

- Try to make all your backend services stateless.

  - Just by making this change, we can avoid some of the most fragile technologies, such as:

    - Session replication

    - Session stickiness

  - Notice that I said your backend services should be stateless; that does not mean we can't support sessions. We can, but we move the session to the front-end layer. The session lives on the client side, which removes a lot of load from our backend services when we have a huge number of concurrent users.

- Use caching to minimize data reads from other services.

- As discussed earlier, we can use service replication to improve service performance.

- Try moving to the FE -> BFF pattern. This can be a major performance improvement, because the front end is a separate component deployed on its own web server, and the backend is only responsible for supplying data to the front end.
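For the caching point, here is a minimal Python sketch of a time-to-live cache in front of a remote lookup. `loader` is a hypothetical function that calls the owning service, and the 30-second TTL is an arbitrary example. A short TTL trades some staleness for fewer cross-service calls. `invalidate` lets a service drop an entry it knows has changed.

```python
import time
from typing import Callable


class TTLCache:
    """Caches results of a remote lookup for `ttl` seconds to avoid repeat calls."""

    def __init__(self, loader: Callable[[str], object], ttl: float = 30.0, clock=time.monotonic):
        self.loader, self.ttl, self.clock = loader, ttl, clock
        self._entries = {}  # key -> (expires_at, value)

    def get(self, key: str):
        now = self.clock()
        hit = self._entries.get(key)
        if hit and hit[0] > now:
            return hit[1]  # fresh entry: no remote call
        value = self.loader(key)  # e.g. an HTTP call to the owning service
        self._entries[key] = (now + self.ttl, value)
        return value

    def invalidate(self, key: str) -> None:
        self._entries.pop(key, None)
```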

Database considerations

Separate DB for Each Service

In this pattern, we maintain a separate database for each domain, such as users, accounts and payments. This keeps services loosely coupled, and each service owns the data that belongs to it. No other service can access that data without going through the owner service's API. We have three options to do this:

- Private-tables-per-service – each service owns a set of tables that must only be accessed by that service

- Schema-per-service – each service has a database schema that’s private to that service

- Database-server-per-service – each service has its own database server.

Separate DB for Reading and Writing (write master and read slaves)

This pattern is useful when an application has few writes and many more reads. We can improve read performance simply by adding more read slaves, which improves the scalability of the whole architecture.
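Below is a minimal Python sketch of routing for this pattern. It assumes hypothetical host names and uses a deliberately naive rule: any statement starting with `SELECT` counts as a read. A `SELECT ... FOR UPDATE`, for example, should really go to the master. Replicas can lag behind the master, so reads that must see the caller's own latest write are also sent to the master.

```python
import itertools


class ReadWriteRouter:
    """Sends writes to the master and spreads reads across read replicas."""

    def __init__(self, master: str, replicas: list):
        self.master = master
        # Fall back to the master if no replicas are configured.
        self._replicas = itertools.cycle(replicas or [master])

    def route(self, sql: str, read_your_writes: bool = False) -> str:
        is_read = sql.lstrip().lower().startswith("select")  # naive classification
        if is_read and not read_your_writes:
            return next(self._replicas)
        # Writes, and reads that must not observe replication lag, use the master.
        return self.master
```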